When we use SSIS to import files to Database, there is a very comm issues for most of user. How we can dynamically set the folder path and file filters for Foreach File Enumerator in a Foreach Loop. There are lots of website mentioned how to import different type files to database, how to loop folders and most of those website are hard code folder path and file filters. But for one of my project, I need to dynamically loop the folders and pick up file dynamically per parameter.

It is quite easy to implement the feature if you know the property Directory of the Foreach File Enumerator. Now let us look at the steps:

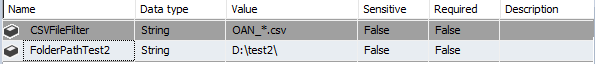

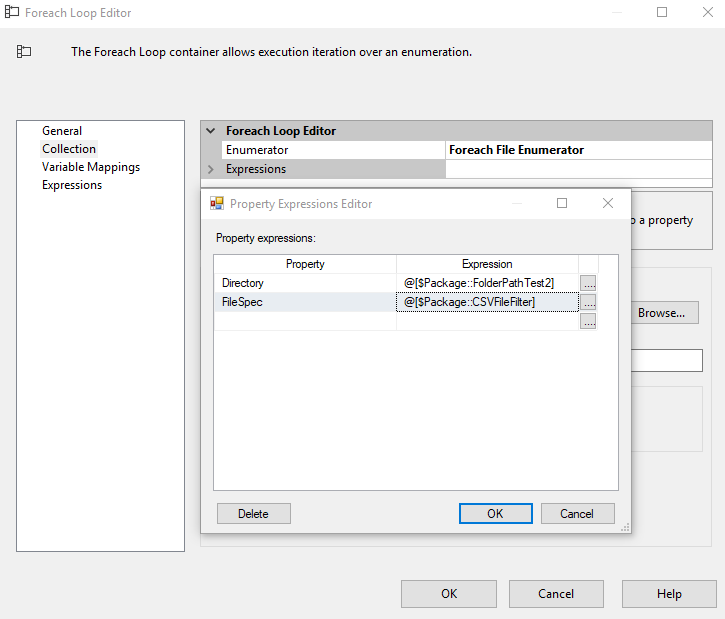

1. Create two SSIS package parameters CSVFileFilter and FolderPathTest2.

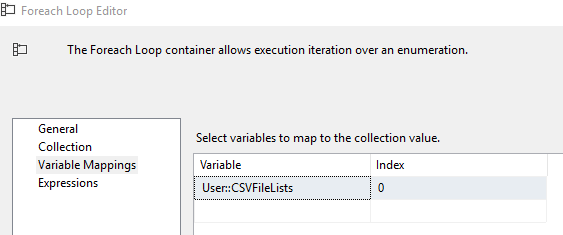

Create two variables CSVFileLists and CSVFileName

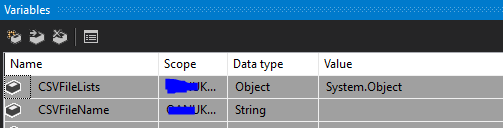

2. Create a Foreach Loop Container task to the Control Flow panel, double click it to pop up the property window.

3. Switch to the Collection tab, choose the Enumerator as “Foreach File Enumerator”, expand Expressions and add two properties “Directory” for folder path and “FileSpec” for filtering specific types of files. Specify “@[$Package::FolderPathTest2]” to Directory and ” @[$Package::CSVFileFilter]” to FileSpec.

4. Mapping the variable

5. Click OK to finished Foreach Loop Container configuration

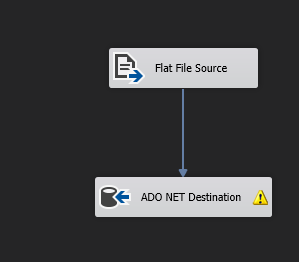

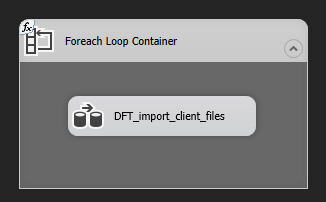

6. Add a Data Flow Task in the Foreach Loop Container

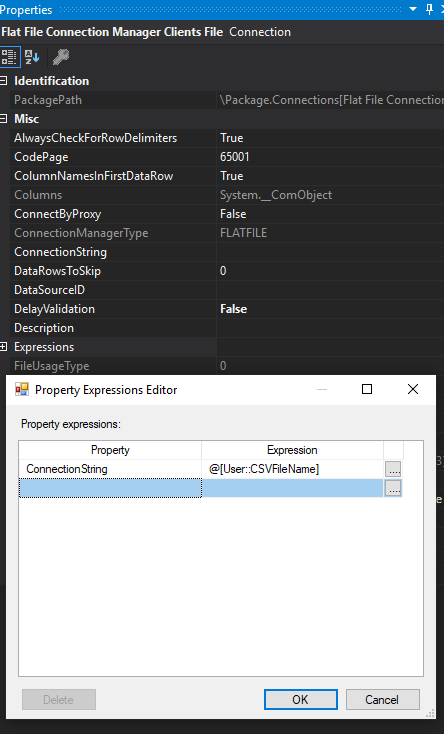

7. Add a Flat File Source inside the Data Flow Task,configure Flat File Source

8. Assign variable @[User::CSVFileName] ‘Flat File Connection Manager’ ConnectionString property

9. Add ADO NET Destination then we can dynamically import file to table now.